Simon Bains is our chair today and he's introducing our tutorial from Talat and Stephanie from UKOLN.

Talat Chaudri (UKOLN) - Building application profiles in practice: agile development and usability testing (2 hours)

Talat is talking about a specific set of application profiles funded by the JISC, particularly Dublin Core profiles. These were funded but have not been taken up as expected so Talat's post has been created to find out why these profiles are not in more use. For instance:

- Practical usability testing (JISC DCAPS: SWAP, GAP, IAP, TBMAP, LMAP, SDAP) - some of these are broad (and possibly unwieldy) and some are extremely specialized profiles.

- There is a sense that JISC built these profiles and they should be in use. Talat feels that what we don't want to do next is build on untested, preconceived ideas of user requirements - we should consult users instead!

- Substantiate need for complex metadata structures/terms: convince developers.

- All outcomes possible (minor alterations, radical reformulation, status quo). We may learn that what was done isn't perfect. They may be great and have certainly been created by specialists but it may be that changes are required to make the profiles more useful. The profiles may be good but we may need tools for implementation.

We know that different institutions work differently and may implement these things differently, so we need to:

- Start where we can do most (and feedback is most immediately available). We are starting with SWAP as we have the most experience there but the other profiles are also based on SWAP so it's a good starting point. It's not that it's the most important profile.

- Iterative development of methodology (different per resource/DCAP?). We may not get things right the first time. We'll do this like software design: deliver, test, take feedback, make tweaks etc. I don't think it's an approach that's really been taken with metadata before.

- Work with what we have (DCAPs as they currently stand, not further hypothesis).

- Test interfaces and paper prototyping. We'll be doing some of this today with the Card Sorting method.

- Include everybody.

- Clearer idea of what APs do for real users (repositories, VREs, VLEs etc). The idea is to take bits of metadata schema from different places and tailor them for a specific purpose.

- Methodology for building APs that work (how to make them better in future). We built an application profile from scratch - what's the best way to do that again? And what is the process for assessing and improving an AP? We need to capture this too.

- Toolkit approach to facilitating APs for which there is a demonstrated use. We want to test different parts separately and to see what people really want to do and how they really want to use the APs.

- Show the community how to do it! This is part of our role. We can't just throw APs out there; we have to show people how to use these APs and relate them to their work.

What are we Testing and Why?

- Metadata elements that are useful (what you need to say and what for). This is probably the most important issue.

- Relationships between digital objects (what you need to find next). You want useful connections but you don't need absolutely every single connection. What do users need? This is a jumping-off point from Google really. Making a key connection to related items or citations etc. might be most useful. There are other connections you could make that may have no value for users.

- Structure (what related things have in common e.g. article & teaching slides). What is the same? Effectively this is about entities. They are about sections of metadata. If things have a relationship what is it?

- Don't build what you don't need. If users don't want or need it you shouldn't develop it.

What do you want and need from this data?

Event Type: Workshop

- Workshop Location

- Workshop Type

- Workshop Date

- Opinion of Workshop {boring; interesting} etc.
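As a rough illustration of the workshop example above, here is a minimal Python sketch of how those four properties might be written down as a record. The class and field names are my own, and the opinion vocabulary only contains the two values shown on the slide, so treat it as one possible reading rather than the model Talat presented.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class Opinion(Enum):
    # Controlled vocabulary from the slide: {boring; interesting}
    BORING = "boring"
    INTERESTING = "interesting"


@dataclass
class Workshop:
    # Field names mirror the four listed properties (hypothetical naming).
    location: str
    workshop_type: str
    held_on: date
    opinion: Opinion


# Illustrative values only, not real event data.
example = Workshop(
    location="Example Hall",
    workshop_type="tutorial",
    held_on=date(2000, 1, 1),
    opinion=Opinion.INTERESTING,
)
print(example.opinion.value)
```

The point of the enum is that "opinion" is a closed list of terms, while the other three properties are free values.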

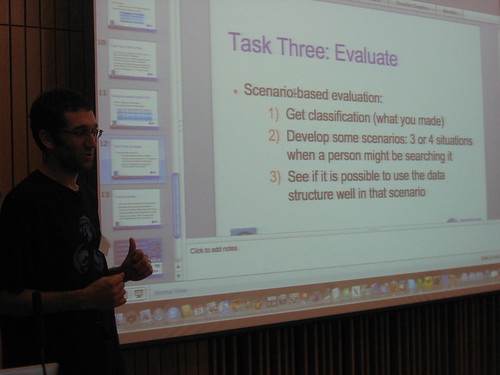

Task: Free-listing, card sorting and user testing a data model

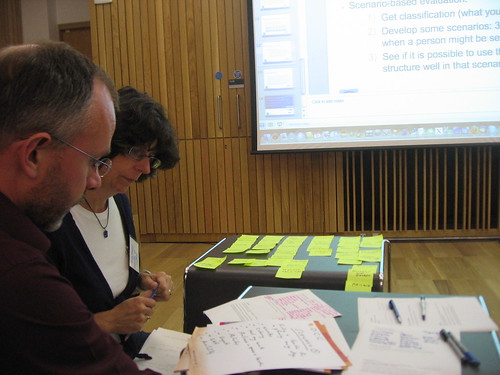

We split into groups and, as this was hands on, I'll just tell you the highlights of the task:

- Each group selected a type of thing to build a data model for. Our group picked "Room Type" - basically a room as a resource that requires a data model.

- Then we individually listed all the properties of that resource type for a few minutes.

- Then we pooled all our ideas and wrote the agreed terms on post it notes - one for each quality.

- Then we sorted those post its into groups/clusters. In our case this was tricky. Where did location fit? Where did price fit? What were our top level categories? We were thinking of the model as relating to a faceted browse of a website...

- Then we created usage scenarios for our data model. This immediately raised a few qualities we hadn't thought of in our model.
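To make the last two steps concrete, here is a small Python sketch of checking a usage scenario against the sorted clusters. The cluster names, properties and scenario are invented for illustration (they are not what our group actually wrote on the post-its), and `missing_properties` is a hypothetical helper.

```python
# Hypothetical clusters, as a group might arrange post-it notes for "Room".
clusters = {
    "location": {"building", "floor"},
    "capacity": {"seats", "standing room"},
    "facilities": {"projector", "wifi"},
}


def missing_properties(clusters, scenario_needs):
    """Return the properties a scenario needs that no cluster contains."""
    known = set().union(*clusters.values())
    return sorted(set(scenario_needs) - known)


# A made-up usage scenario: booking a room for a paid evening event.
scenario = ["seats", "projector", "price", "opening hours"]
print(missing_properties(clusters, scenario))
```

Anything the function returns is a quality the scenario needs but the model lacks, which is exactly the kind of gap the scenarios exposed for us.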

Next the groups each switched a member so that an outsider to the data model could run through one of the scenarios and spot the problems. We immediately found that our outsider was looking at the discovery of a room for booking from completely different priorities/qualities. This meant our top level qualities probably needed a rethink. But of course this was only one user. We'd need to test the same model with lots of users to know what works and what doesn't. Additionally we were thinking of our model as relating to websites but was the model the website structure or the data structure? We weren't sure...

Final Comments from Talat

- User level view of things should be simplified.

- Developer will see structural complexity. You need to know why it's useful to use a data structure.

- Good documentation makes happy developers. And happy users. It's important to be clear about what you can use this model for. Showing and telling the models really helps.

- Test early, test often. Card is cheaper than code! People need to know what it's for! Things can't be perfect for everyone but you cannot foresee things in theory. And metadata needs to be a flexible living structure.

After this session we have a little coffee break (and such edible perks as micro eclairs!) and then back for the next tutorial:

Richard Jones, Symplectic: “AtomPub, SWORD and how they fit the repository: going beyond deposit”

This will be about how to create tools that work over many repositories, and the relationships between dynamic and static systems. There will be a bit about SWORD, AtomPub and how they are used. Richard will also be talking about repository object models and their equivalences across platforms, as well as repository workflows and their equivalences across platforms.

How do we provide deposit tools that are usable and simple for depositors? And, from the institutional perspective, what is the bigger picture, and how is data deposited and reused?

In this tutorial we'll look at how the static meets and clashes with the dynamic. Scholarly publications are not completely static but repositories expect static content. There is a notion of the golden record of a piece of research. There is no sense of version management other than through metadata notes (nothing like Subversion which is often used for version control in developments).

Richard is showing us an architectural overview of the solution provided by Symplectic. It is a matter of combining lots of flexible repository tools and interfaces, provided by Symplectic, that sit on the repository that the university runs.

Now we're having a nice demo of Richard depositing materials as an academic (on the left hand screen) and viewing them in DSpace as a repository manager (on the right hand screen). Interestingly, the approach in Richard's demo, with a single user account for an institution rather than each academic needing an account for the repository, is increasingly common. Each academic has a uun for the university system so the Symplectic part of the system just slots into their whole academic login/homepage. There is a real time relationship between the DSpace repository and the Symplectic tool in the academic's homepage/webpage management space.

What is SWORD?

The key thing Richard would point out is that SWORD has an error handling specification. It's really for create only and package deposit. There is a requirement to retrieve a URI and that can be something that allows you to access things more flexibly (through AtomPub say).

"AtomPub is an application-level protocol for publishing and editing web resources"

- It is a RESTful protocol - no need for a grand web service framework, just plain HTTP requests.

- The spec for AtomPub allows more flexible create, replace, modify and delete.

- All communications are in Atom format - designed for web type content, not binary type content.

- HTTP POST in AtomPub is designed to allow an Atom feed document to be published at a specific end point.
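To give a feel for what such a POST looks like on the wire, here is a minimal Python sketch that builds (but does not send) a request carrying a small Atom entry. The endpoint URL, user name and function names are made up for illustration, and the `X-On-Behalf-Of` header is the mediated deposit header name as I understand it from the SWORD 1.x profile, so check the version your repository runs. This is not Symplectic's code.

```python
import urllib.request
import xml.etree.ElementTree as ET

ATOM_NS = "http://www.w3.org/2005/Atom"


def build_entry(title, author):
    """Serialise a minimal Atom entry document to bytes."""
    ET.register_namespace("", ATOM_NS)
    entry = ET.Element(f"{{{ATOM_NS}}}entry")
    ET.SubElement(entry, f"{{{ATOM_NS}}}title").text = title
    author_el = ET.SubElement(entry, f"{{{ATOM_NS}}}author")
    ET.SubElement(author_el, f"{{{ATOM_NS}}}name").text = author
    return ET.tostring(entry, encoding="utf-8")


def build_deposit_request(collection_url, body, on_behalf_of):
    """Prepare (but do not send) a POST to a collection endpoint."""
    headers = {
        "Content-Type": "application/atom+xml;type=entry",
        # SWORD 1.x style header for mediated deposit (assumption here).
        "X-On-Behalf-Of": on_behalf_of,
    }
    return urllib.request.Request(
        collection_url, data=body, headers=headers, method="POST"
    )


# Hypothetical endpoint and user; nothing is sent over the network.
request = build_deposit_request(
    "http://repository.example.org/sword/deposit/collection-1",
    build_entry("An example preprint", "A. N. Academic"),
    on_behalf_of="academic1",
)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would actually send it; a repository following AtomPub would be expected to answer with an Atom document describing what it created.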

So Publications does a POST, with SWORD headers, of Atom feed or file content to the repository. The repository then returns an Atom feed, and Publications provides a feed.

It's interesting to get into how repositories describe their deposits. Items (DSpace) are eprints (EPrints) are objects (Fedora) are a feed in Atom. All the API calls need to respect the different naming used here. There is no real equivalent of bundle or document for Fedora or Atom but they can be added to bitstream/entries. This may be a ubiquitous structure.
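The naming equivalences can be sketched as a simple lookup table in Python. The item-level row and the bundle, document, bitstream and entry cells come from the talk; the EPrints "file" and Fedora "datastream" cells are my own assumption to complete the file-level row, and `translate` is a hypothetical helper.

```python
# Concept -> platform -> local name. None means "no real equivalent".
TERMS = {
    "item": {"dspace": "item", "eprints": "eprint",
             "fedora": "object", "atom": "feed"},
    "group": {"dspace": "bundle", "eprints": "document",
              "fedora": None, "atom": None},
    # "file" and "datastream" are assumptions, not from the talk.
    "file": {"dspace": "bitstream", "eprints": "file",
             "fedora": "datastream", "atom": "entry"},
}


def translate(term, source, target):
    """Map a platform-specific term to its name on another platform."""
    for names in TERMS.values():
        if names[source] == term:
            return names[target]
    raise KeyError(f"{term!r} is not a known {source} term")


print(translate("eprint", "eprints", "dspace"))
print(translate("bundle", "dspace", "fedora"))
```

A tool that works across platforms needs something like this table behind every API call, including a decision about what to do when the answer is `None`.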

Repositories aren't just structures but also workflows (Richard is referring back to his "Machu Picchu" session yesterday). The terms for each part of the process are named differently on each platform. The workflow is flexible until the archive stage, when you are expected to reach a static state.

So if you want a dynamic system sitting on a static system, how do you get the feedback you need? There are different stages at which you can ask about changes, but at the archive stage there is no ability to modify: you have to create a clone copy to work on. Richard will now demo this. For instance, a preprint was deposited and you want to update it with the print or postprint version. You can revoke the right to publish on something already in the repository, which kicks it back to the workflow, which means you can then replace the copy, change it, etc. Academics can add and remove files in Publications as they like. New items are created in the repository. In the archive there is a versioning system that allows references forward and backward in the repository. So you replace and update clones; items never leave the repository.

There are some wrinkles, especially to do with the level of change. You can have minor changes that do not trigger a review process of the deposited item, or major changes that trigger a re-review of the item. This is a matter of configuration. However, the gist is that you create linked duplicates, marked up in the metadata. You can choose to delete items or directly edit metadata, but the automatic process creates multiple clones. EPrints has a version control structure already and ideally that is what you would want in repositories in general.
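Here is a minimal Python sketch of the linked-duplicate idea as I understood it: archived items are never edited, a new version is a clone that points back at the item it replaces, and a flag records whether the change is big enough to need review. The class, field and method names are all hypothetical, not taken from Symplectic or DSpace.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Item:
    item_id: int
    files: list
    replaces: Optional[int] = None      # backward reference
    replaced_by: Optional[int] = None   # forward reference
    needs_review: bool = False


class Archive:
    def __init__(self):
        self.items = {}
        self._next_id = 1

    def deposit(self, files):
        """Create a new archived item."""
        item = Item(self._next_id, list(files))
        self.items[item.item_id] = item
        self._next_id += 1
        return item

    def new_version(self, old_id, files, major=False):
        """Clone instead of modify; the old item stays in the archive."""
        old = self.items[old_id]
        clone = self.deposit(files)
        clone.replaces = old.item_id
        clone.needs_review = major  # only major changes trigger re-review
        old.replaced_by = clone.item_id
        return clone


archive = Archive()
preprint = archive.deposit(["preprint.pdf"])
postprint = archive.new_version(preprint.item_id, ["postprint.pdf"], major=True)
print(len(archive.items), preprint.replaced_by, postprint.replaces)
```

Both items remain in the archive after the update, and the forward and backward references are the only thing that ties the versions together.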

Additional note: the academic workspace includes a publishers policy area but as many organizations want no barriers to deposit this is often ticked by default or set to be a question mark to indicate that items can be deposited OR that it is not known whether or not items can be deposited.

Deduplication: we're allowing an item to have multiple parents, so that there is an automatic process for handling many depositors putting the same item into the same repository.

Fire and forget deposit is not sufficient to interact with the full ingest life-cycle of an average repository system. SWORD and AtomPub offer most of what you need for a deposit life-cycle environment. Atom can be used effectively to describe content going in and out of repositories. Repositories have analogous content models and ingest processes. De-duplication of items is very much non-trivial.
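A toy Python sketch of the "multiple parents" idea: one stored item, many depositors attached to it. Matching on a hash of identical bytes is my own simplification to keep the example short; real deduplication has to cope with the same work arriving as different files. `DedupStore` and its method names are hypothetical.

```python
import hashlib


class DedupStore:
    def __init__(self):
        # content digest -> set of parents (e.g. depositors) for that item
        self.parents = {}

    def deposit(self, content, parent):
        """Store content once; attach each new depositor as a parent."""
        digest = hashlib.sha256(content).hexdigest()
        self.parents.setdefault(digest, set()).add(parent)
        return digest


store = DedupStore()
first = store.deposit(b"same paper", "author-a")
second = store.deposit(b"same paper", "author-b")
print(first == second, len(store.parents), sorted(store.parents[first]))
```

Two co-authors depositing the same file end up sharing a single item that has both of them as parents.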

Q & A

Q: How does this work for depositing for multiple repositories?

A: It does not support multiple simultaneous deposits yet. You can pick between different ones to deposit to though. We want everything else to work first. We hope to have it working for all three systems mentioned (DSpace, Fedora, EPrints) soon. After that we want to look to deposit in multiple places. We need to crack policy and process issues there though as different repositories have different agreements etc.

Q: The license in Publications, where is it pulled from? Are there different licenses for different collections?

A: It's not pulled exactly yet. We're moving to a choice of licenses or combination of licenses. Different licenses for different collections isn't possible yet. We provide a collection mapper to put content into a given collection at the moment. There is also a more complex tool to map collections to who may wish to deposit in them. It is very complex to set up and local specific so it's not part of the standard Symplectic offering.

Richard concluded by welcoming comments and questions over lunch. Off to grab some food for me too...
